Azul cloud cost optimization experts researched why companies struggle with cloud costs. After careful investigation, they identified six widely believed but false myths about Java cloud cost optimization and produced the facts to counter each one.

Myth: All Java runtimes are the same.

Reality: Industry analysts and leading companies running modern cloud architectures agree that using a High-Performance Java Platform with JIT compilation improves Java application performance and reduces cloud waste.

It’s a common misconception that is costing you and your team millions.

Java is still one of the most popular languages for building websites, mobile applications, and distributed data processing frameworks (source: “Is Java Still Relevant Nowadays?”, JetBrains, July 2024). That covers everything you need to run digital businesses across the AdTech, FinTech, Gaming, E-commerce, High-Performance Trading, and Online Travel and Booking industries. Azul’s State of Java Survey & Report 2023 also showed that Java has almost universal adoption among commercial enterprises.

And digital businesses run on the cloud. See where we’re going?

It’s fair to say that you are probably focused on which Java frameworks you are using…or which version you are on. You spend time thinking about feature velocity and how to get to market as quickly as possible while delivering the best customer experience.

Or…maybe you are looking to deliver the best customer experience while maintaining a low cost of ownership for your Java estate.

Java is just Java, right? Nope.

Have you ever stopped to think about which Java Virtual Machine you are running on? Not all Java runtimes are the same. Most Java distributions, including those from Oracle, Temurin and Amazon Corretto, are essentially vanilla packaging of OpenJDK.

Your choice of JVM matters. Some JVMs offer real advantages: they are designed to make Java workloads run faster, deploy more easily and use less compute.

We have found that the faster you can run your Java, the less compute it uses, and that lowers cloud waste. Did you know? There is a High-Performance Java Platform that is specifically optimized to improve application performance by making the code run faster while reducing cloud waste.

Let’s take a deeper look at the problem

Most applications today are over-provisioned to handle peak loads. But why spend capex on enough hardware to cover your peak loads, and then spend opex to power, cool and maintain those machines? Just let a provider like Amazon, Microsoft or Google do that for you, and pay only for what you use, as you do with your electricity bill.

This is the promise of cloud computing: a utility-based pricing model with lower bills to run your mission-critical enterprise applications.

Unfortunately, the reality often turns out to be different, and people find that moving to the cloud costs them more than hosting on-premises. How can this be and how can we address this?

Let’s look specifically at JVM-based applications. We focus on Java here, but many other languages, like Kotlin, Scala and Clojure, can also be compiled for the JVM, so the same points apply to them.

Microservices are the modern approach to architecting cloud-based applications. Rather than developing a single, monolithic application, we break the application into discrete services that can be loosely coupled yet highly cohesive. In doing this, when one service becomes a bottleneck to performance, we can spin up new instances of that service, load balance usage and eliminate the bottleneck without needing to change other parts of the system.

This is where one of the core pieces of functionality of the JVM can lead to wasted cloud resources.

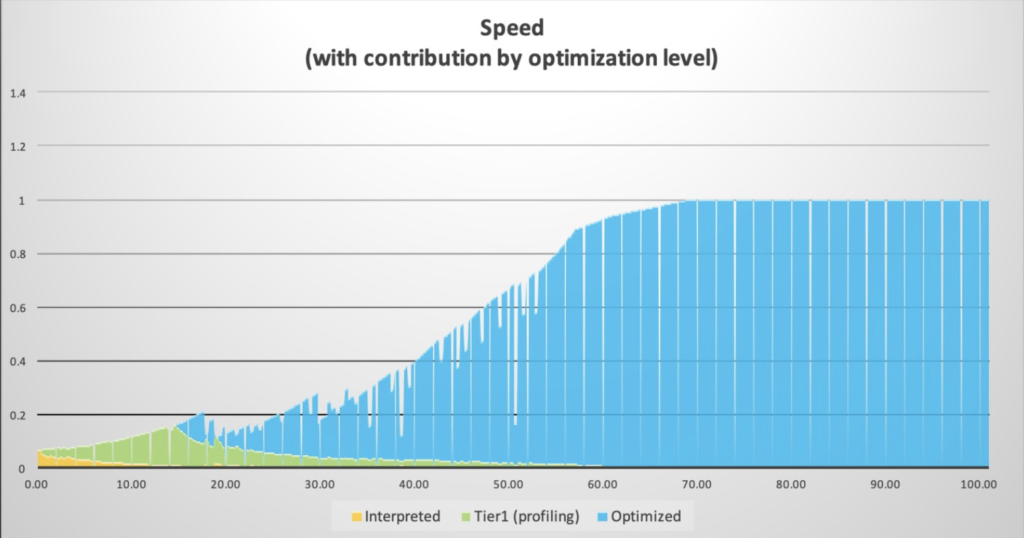

To deliver on the promise of “write once, run anywhere,” Java source code is compiled to bytecodes, the instructions of a virtual machine rather than of a specific processor. When a Java application starts, the JVM interprets these bytecodes and profiles the running code to identify frequently used hot spots that can be compiled into native code. This just-in-time (JIT) compilation delivers excellent performance because the JVM knows precisely how the code is being used and can optimize it accordingly.
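To see warmup for yourself, here is a minimal Java sketch, assuming a HotSpot-based JVM such as OpenJDK. It calls one small method in repeated batches and times each batch; the class and method names are purely illustrative. Typically the first batches are slower while the method is interpreted and profiled, and later batches speed up once the JIT has compiled it, but exact timings depend on the JVM, hardware and flags, so treat this as a demonstration rather than a benchmark.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative only: timings vary by JVM, hardware and flags; this is not a benchmark.
class WarmupDemo {

    // Storing the accumulated result here keeps the work observable to the JVM.
    static volatile long sink;

    // A small CPU-bound method that becomes "hot" when called repeatedly.
    static long sumOfSquares(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            total += (long) i * i;
        }
        return total;
    }

    // Calls the hot method in batches and records each batch's duration in nanoseconds.
    static List<Long> timeBatches(int batches, int callsPerBatch, int n) {
        List<Long> nanosPerBatch = new ArrayList<>();
        long acc = 0;
        for (int b = 0; b < batches; b++) {
            long start = System.nanoTime();
            for (int c = 0; c < callsPerBatch; c++) {
                acc += sumOfSquares(n);
            }
            nanosPerBatch.add(System.nanoTime() - start);
        }
        sink = acc;
        return nanosPerBatch;
    }

    public static void main(String[] args) {
        List<Long> times = timeBatches(10, 2_000, 1_000);
        for (int b = 0; b < times.size(); b++) {
            System.out.printf("batch %d: %.3f ms%n", b, times.get(b) / 1e6);
        }
    }
}
```

Running it with `java -XX:+PrintCompilation WarmupDemo.java` (single-file launch needs Java 11 or later) also logs methods as HotSpot compiles them, so you can line up the speed-up with compilation events; the log is noisy because the launcher compiles the source in the same JVM.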

However, identifying and compiling all the frequently used sections of code (in practice a more complex, multistage process) can take longer than desired. This warmup time is not generally an issue for long-running processes like web or application servers. By contrast, microservices may start and stop frequently to respond dynamically to load variation, and waiting for a microservice to warm up before it can deliver its full carrying capacity reduces the benefits of this approach.

A frequently used solution is to start multiple instances of a service and leave them running so that they are ready to deliver full performance immediately when required. This is obviously very wasteful and incurs unnecessary cloud infrastructure costs.
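To put a rough number on that waste, the back-of-the-envelope Java sketch below multiplies the number of always-on, pre-warmed spare instances by an hourly rate and the roughly 730 hours in an average month. The service count, spares per service and $0.20 hourly rate are hypothetical placeholders, not real cloud prices or Azul figures; substitute your own.

```java
// Back-of-the-envelope model; every number below is a hypothetical placeholder.
class StandbyCost {

    // Average hours in a month (8,760 hours per year / 12).
    static final double HOURS_PER_MONTH = 730.0;

    // Monthly cost of instances that run around the clock at a flat hourly rate.
    static double monthlyCost(int instances, double hourlyRate) {
        return instances * hourlyRate * HOURS_PER_MONTH;
    }

    public static void main(String[] args) {
        int services = 20;            // hypothetical number of microservices
        int warmSparesPerService = 2; // hypothetical idle, pre-warmed instances per service
        double hourlyRate = 0.20;     // hypothetical price per instance-hour, in USD

        int idleInstances = services * warmSparesPerService;
        System.out.printf("%d idle instances cost about $%.2f per month%n",
                idleInstances, monthlyCost(idleInstances, hourlyRate));
    }
}
```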

How can we solve this problem with AOT or JIT compilation?

One approach is to use ahead-of-time (AOT) compilation. Rather than using JIT compilation, all code is compiled directly to native instructions. This eliminates warmup entirely, and the application starts with the full level of performance available.

Although this sounds like the ideal solution, it comes with costs and limitations.

AOT compiles code without knowing how it will be used, limiting the potential for optimization. JIT compilation has profiling information that enables optimizations tailored precisely to the way the application is being used. Typically, this results in significantly better overall performance.
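As an illustration of what "tailored" can mean, the following Java sketch sets up a call site that a profiling JIT can specialize. While only `Circle` objects have reached `s.area()`, a compiler such as HotSpot's C2 may speculatively inline `Circle.area()` behind a cheap type check; when a `Square` arrives, that assumption no longer holds and the compiled code can be deoptimized and later recompiled. An AOT compiler has no such runtime profile to speculate on. The shape classes are hypothetical, and whether and when inlining or deoptimization actually happens is decided by the JVM, not by this code.

```java
import java.util.Arrays;

// Illustrative only: the JVM, not this code, decides when to inline or deoptimize.
class SpeculationDemo {

    interface Shape {
        double area();
    }

    static final class Circle implements Shape {
        private final double radius;
        Circle(double radius) { this.radius = radius; }
        @Override public double area() { return Math.PI * radius * radius; }
    }

    static final class Square implements Shape {
        private final double side;
        Square(double side) { this.side = side; }
        @Override public double area() { return side * side; }
    }

    // s.area() is a virtual call; the JIT's type profile records which classes reach it.
    static double totalArea(Shape[] shapes) {
        double total = 0;
        for (Shape s : shapes) {
            total += s.area();
        }
        return total;
    }

    public static void main(String[] args) {
        Shape[] circles = new Shape[1_000];
        Arrays.fill(circles, new Circle(1.0));

        // Phase 1: only Circle reaches the call site, so the type profile is monomorphic.
        double sink = 0;
        for (int i = 0; i < 20_000; i++) {
            sink += totalArea(circles);
        }

        // Phase 2: a second receiver type invalidates any "only Circle" speculation.
        Shape[] mixed = { new Circle(1.0), new Square(2.0) };
        sink += totalArea(mixed);
        System.out.printf("checksum: %.1f%n", sink);
    }
}
```

On HotSpot, `-XX:+UnlockDiagnosticVMOptions -XX:+PrintInlining` shows inlining decisions, and `-XX:+PrintCompilation` marks deoptimized methods as "made not entrant"; the exact output format differs between JDK versions.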

For ephemeral microservices, such as those used in serverless computing, AOT delivers clear benefits. For any service that will run for at least a few minutes, JIT compilation will deliver better performance and, therefore, lower cloud computing costs.
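Where the "few minutes" threshold falls depends on the workload. One way to reason about it is a deliberately simple, hypothetical model, sketched here in Java: the AOT-compiled service runs at a constant rate from the start, while the JIT-compiled one ramps linearly from a lower rate to a higher peak over a warmup period. Integrating both rates gives the time at which the JIT version has caught up in cumulative work. The rates and warmup time in `main` are made-up placeholders, and the model ignores real effects such as the CPU the JIT compiler itself consumes.

```java
// A deliberately simple, hypothetical throughput model; not measured behavior.
class BreakEven {

    // Seconds until a JIT service has done as much cumulative work as an AOT service.
    // AOT: constant rate aotRate from t = 0.
    // JIT: linear ramp from jitStartRate to jitPeakRate over warmupSeconds, then flat.
    static double breakEvenSeconds(double aotRate, double jitStartRate,
                                   double jitPeakRate, double warmupSeconds) {
        if (jitStartRate >= aotRate) return 0.0;                     // JIT is never behind
        if (jitPeakRate <= aotRate) return Double.POSITIVE_INFINITY; // JIT never catches up
        double ramp = jitPeakRate - jitStartRate;
        if (2 * aotRate - jitStartRate <= jitPeakRate) {
            // Curves cross during the ramp: S*t + ramp*t^2/(2W) = A*t.
            return 2 * warmupSeconds * (aotRate - jitStartRate) / ramp;
        }
        // Curves cross after warmup, once the peak rate repays the warmup deficit:
        // t = W * (P - S) / (2 * (P - A)).
        return warmupSeconds * ramp / (2 * (jitPeakRate - aotRate));
    }

    public static void main(String[] args) {
        // Hypothetical rates in requests per second, and a hypothetical 60 s warmup.
        double t = breakEvenSeconds(80, 20, 100, 60);
        System.out.printf("JIT overtakes AOT in cumulative work after %.0f s%n", t);
    }
}
```

Beyond the break-even point, every additional second of uptime favors the JIT-compiled instance in this model, which is why longer-running services benefit most.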

| AOT Compilation | JIT Compilation |
|---|---|
| Class loading prevents method inlining | Can use aggressive method inlining |
| No runtime bytecode generation | Can use runtime bytecode generation |
| Reflection is complicated | Reflection is (relatively) simple |
| Unable to use speculative optimizations | Can use speculative optimizations |
| Overall performance will typically be lower | Overall performance will typically be higher |
| Full speed from the start | Requires warmup time |
| No CPU overhead to compile code at runtime | CPU overhead to compile code at runtime |
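Two rows of this table, runtime bytecode generation and reflection, are easy to see in ordinary Java. The sketch below loads a class whose name is only known at runtime and creates a `java.lang.reflect.Proxy`, which defines a new class while the program runs. On a JIT-based JVM both simply work. An AOT toolchain that performs closed-world analysis, such as GraalVM Native Image, generally needs such classes to be discoverable or declared in configuration at build time. The `Greeter` interface and the method names are hypothetical.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

class DynamicFeaturesDemo {

    // A hypothetical interface to implement at runtime.
    interface Greeter {
        String greet(String name);
    }

    // Reflection: the class to instantiate is chosen from a string at runtime.
    static Object newInstanceByName(String className) {
        try {
            Class<?> type = Class.forName(className);
            return type.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("cannot instantiate " + className, e);
        }
    }

    // Runtime class generation: Proxy defines a new class implementing Greeter on the fly.
    static Greeter proxyGreeter() {
        InvocationHandler handler = (proxy, method, args) -> "Hello, " + args[0];
        return (Greeter) Proxy.newProxyInstance(
                Greeter.class.getClassLoader(),
                new Class<?>[] {Greeter.class},
                handler);
    }

    public static void main(String[] args) {
        // The class name could just as well come from a config file or user input.
        String className = args.length > 0 ? args[0] : "java.util.ArrayList";
        System.out.println(newInstanceByName(className).getClass().getName());
        System.out.println(proxyGreeter().greet("cloud"));
    }
}
```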

So, although AOT offers clear advantages at startup, the JIT compiler typically produces better-performing code at runtime.

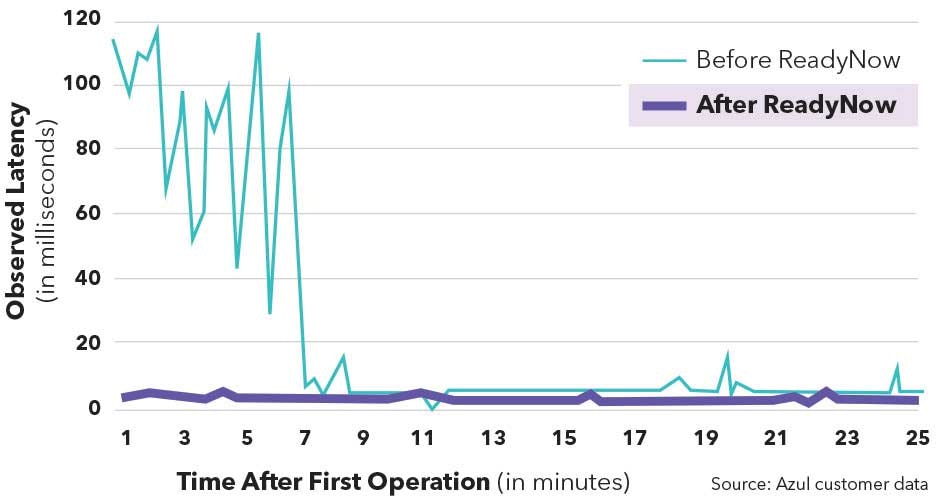

An alternative solution is ReadyNow, the warmup technology in Azul Platform Prime, Azul’s High-Performance Java Platform.

The problem, as we have seen, is that each time we start an instance of a microservice, the JVM must perform the same analysis to identify hot spots, gather profiling information and compile them to native code. This happens even when we have used that microservice in the same way many times before. With ReadyNow, the service is started and allowed to warm up in production using real-world requests, not simulated ones. When the service is fully warmed up (has reached its optimum level of performance), a profile is collected. This profile includes all the information required to obtain that level of performance: a list of hot spots, profiling data and even compiled code.

When the service needs to be started again, the profile is provided as part of the execution parameters. The profile ensures that when the JVM is ready to handle the first transaction, performance will be almost at the level it was when the profile was taken.
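In practice, "provided as part of the execution parameters" means JVM command-line options. The Java sketch below only assembles the command line for a training run and for later starts; it does not launch anything. It assumes the `-XX:ProfileLogOut=<file>` and `-XX:ProfileLogIn=<file>` options that Azul has documented for ReadyNow on Azul Platform Prime; option names and recommended usage can change between releases, so check the documentation for your version. The jar and profile paths are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Builds (but does not run) a java command line. Assumes the ReadyNow options
// -XX:ProfileLogOut / -XX:ProfileLogIn; verify them for your Prime release.
class ReadyNowLauncher {

    enum Mode { RECORD, REPLAY }

    static List<String> command(Mode mode, String profilePath, String jarPath) {
        List<String> cmd = new ArrayList<>();
        cmd.add("java");
        if (mode == Mode.RECORD) {
            // Training run: write a profile while the service warms up on real traffic.
            cmd.add("-XX:ProfileLogOut=" + profilePath);
        } else {
            // Later starts: read the previously recorded profile.
            cmd.add("-XX:ProfileLogIn=" + profilePath);
        }
        cmd.add("-jar");
        cmd.add(jarPath);
        return cmd;
    }

    public static void main(String[] args) {
        // Hypothetical profile location and service jar.
        List<String> cmd = command(Mode.REPLAY, "/profiles/orders-service.profile", "orders-service.jar");
        System.out.println(String.join(" ", cmd));
    }
}
```

Starting the instance would then be a matter of passing the list to `new ProcessBuilder(cmd).inheritIO().start()`.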

ReadyNow eliminates almost all warmup time, while retaining all the performance benefits of JIT compilation. This system provides complete flexibility since different profiles can be used for the same service, depending on when and where the service is in use. For example, the workload profile may be very different on a Monday morning than on a Friday afternoon. ReadyNow can store multiple profiles and select the appropriate one when required.
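To make the idea of workload-specific profiles concrete, here is a hypothetical selection policy in Java that maps a start time to a profile file, with separate profiles for Monday mornings, Friday afternoons and weekends. The time windows and file names are invented for illustration; this is not how ReadyNow itself chooses profiles, and in a real deployment the chosen path would be handed to the JVM at startup.

```java
import java.time.DayOfWeek;
import java.time.LocalDateTime;

// Hypothetical policy: time windows and file names are illustrative only.
class ProfileSelector {

    static String profileFor(LocalDateTime startTime) {
        DayOfWeek day = startTime.getDayOfWeek();
        int hour = startTime.getHour();
        if (day == DayOfWeek.SATURDAY || day == DayOfWeek.SUNDAY) {
            return "profiles/weekend.profile";
        }
        if (day == DayOfWeek.MONDAY && hour < 12) {
            return "profiles/monday-morning.profile";
        }
        if (day == DayOfWeek.FRIDAY && hour >= 12) {
            return "profiles/friday-afternoon.profile";
        }
        return "profiles/weekday-default.profile";
    }

    public static void main(String[] args) {
        System.out.println(profileFor(LocalDateTime.now()));
    }
}
```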

Now that JVM-based microservices can have minimal warmup time, there is no need to maintain a pool of services sitting idly in the background. This can significantly reduce cloud waste.

A performance-optimized JVM that also includes an alternative memory management system eliminates the latency spikes that garbage collection pauses typically add to transactions. Its JIT compilation system has also been improved to deliver higher throughput. Rather than eliminating idle capacity, these improvements reduce the cloud resources required to provide the same carrying capacity, which lowers cloud costs even further.
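The link between throughput and cost comes down to simple capacity arithmetic: the number of instances needed is the peak load divided by what one instance can carry, rounded up. The Java sketch below applies it to hypothetical numbers; with a 25% gain in per-instance throughput the fleet shrinks by 20%, since 1 / 1.25 = 0.8. None of the figures are measurements.

```java
// Capacity arithmetic with hypothetical figures: instances = ceil(peak / per-instance throughput).
class CapacityPlan {

    static int instancesNeeded(double peakRequestsPerSec, double perInstanceRequestsPerSec) {
        if (perInstanceRequestsPerSec <= 0) {
            throw new IllegalArgumentException("per-instance throughput must be positive");
        }
        return (int) Math.ceil(peakRequestsPerSec / perInstanceRequestsPerSec);
    }

    public static void main(String[] args) {
        double peak = 50_000;    // hypothetical peak load, requests per second
        double baseline = 1_000; // hypothetical per-instance throughput on the current JVM
        double improved = 1_250; // hypothetical 25% higher per-instance throughput
        System.out.println("baseline: " + instancesNeeded(peak, baseline) + " instances");
        System.out.println("improved: " + instancesNeeded(peak, improved) + " instances");
    }
}
```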

High-Performance Java Platform with JIT compilation reduces cloud waste

Let’s look at an example of how this worked for a real customer. Supercell is a company that runs some of the biggest online multiplayer games in the world. For the recent release of Brawl Stars, it was experiencing delays when spinning up new servers as the JVMs were compiling the required code. By switching to Azul Platform Prime, and exploiting ReadyNow, it was able to deliver much more consistent load-carrying capacity, reducing game lag, and reducing CPU usage by 20 to 25% for the same workload.

How fast do you think your code can go? Check out our recent blog post, which benchmarks a High-Performance Java Platform against OpenJDK 8, 11, 17 and 21. How do you compare?

Clearly, a JVM that runs code faster needs fewer cloud resources, so you can make better use of committed, discounted cloud spend and even lower your cloud bill!