The Java platform is nearly 30 years old, yet consistently maintains its position in the top three most popular programming languages. One of the key reasons for this is the Java Virtual Machine (JVM). By abstracting away concerns like memory management and compiling code as it is running, the JVM can deliver internet-level scalability beyond the reach of other runtimes.

Java also stays popular because of the rate at which the language, the libraries and the JVM evolve. Starting with JDK 10 in March 2018, OpenJDK (the open-source project for Java development) switched to a time-based rather than feature-based release schedule. We now have two new versions of Java each year rather than having to wait anywhere between two and four years.

With so many releases, it is not practical for distributions to offer extended maintenance and support for all versions. Only specific ones classified as long-term support (LTS) include this. All releases are suitable for use in production, but most enterprise users will opt only to deploy applications using an LTS JDK.

The current Java release is JDK 22, launched in March 2024. JDK 21, the most recent LTS release, arrived in September 2023 and included some interesting new features that make it attractive for applications that support large numbers of simultaneous users.

Introducing threads in Java

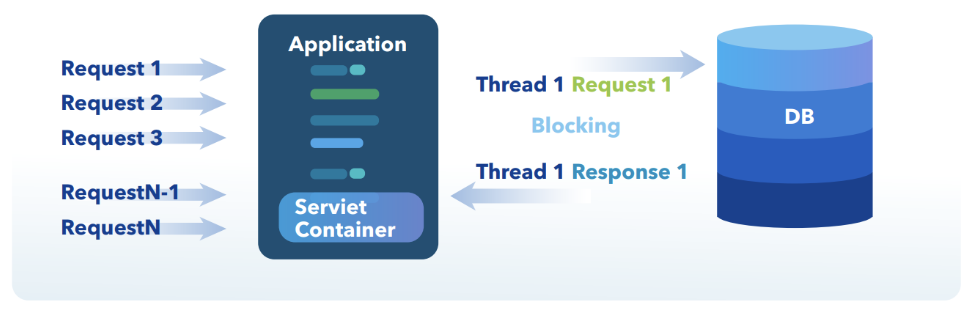

From the beginning, Java was a language that supported the idea of concurrent execution of tasks. Unlike C and C++, which historically relied on external libraries for this support, Java has had the concept of threads built into the language from its first release. Suppose you’re developing a web-based application that will support many simultaneous users. In that case, each user’s connection can be handled concurrently by allocating it its own thread or threads to process the necessary transactions. All this is done independently, keeping each user’s data isolated from others. This is referred to as a thread-per-request (TPR) programming model.
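As a rough illustration of the thread-per-request model, the sketch below starts one thread for each simulated request and collects the responses. The class name `ThreadPerRequestSketch` and the `handle` and `serve` methods are hypothetical stand-ins, not part of any real framework; a production server would normally take requests from a socket and reuse threads from a pool.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class ThreadPerRequestSketch {

    // Hypothetical handler: stands in for the real per-user transaction.
    static String handle(int userId) {
        return "response-for-user-" + userId;
    }

    // Starts one thread per simulated request and waits for all of them to finish.
    static Map<Integer, String> serve(int requests) throws InterruptedException {
        Map<Integer, String> responses = new ConcurrentHashMap<>();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < requests; i++) {
            final int userId = i;
            // Each request gets its own thread, so its work is isolated from the others.
            Thread t = new Thread(() -> responses.put(userId, handle(userId)));
            t.start();
            threads.add(t);
        }
        for (Thread t : threads) {
            t.join();
        }
        return responses;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(serve(5));
    }
}
```

Each handler runs the same straightforward, sequential code, which is what makes the model attractive to developers.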

While this is excellent for the developers of these types of applications, it does not come without limitations. An operating system (OS) such as Linux already handles all of the low-level aspects of threads, scheduling them across the available CPUs and cores of the hardware in use. Prior to JDK 21, every Java thread was mapped directly to an OS thread, so the JVM did not need to handle those low-level aspects itself.

Limitations of Java threads

The drawback is scalability when handling hundreds of thousands (or more) of simultaneous connections. The memory requirements when using so many threads make it impractical to provision cost-effective server hardware, either on-premises or in the cloud.

If you look at how most TPR applications work, you will find that much of the processing involves calls to other parts of the system, whether it be databases, files or network connections to other services. These connections require the thread to wait (or block) until the database, for example, responds. The reality is that the thread spends most of its time waiting, so it is not actively using the OS thread it has been allocated.

JDK 21 introduced the Virtual Thread feature. Rather than using a one-to-one mapping between Java and OS threads, the JVM can now multiplex many Java threads over a small number of OS threads, so multiple Java threads share an OS thread instead of each owning one.

For developers, migrating to virtual threads is very straightforward, only requiring a change to how the thread is created, not how it is used. When the application runs, the JVM takes responsibility for switching between the virtual threads that share an OS thread (now called a platform thread). When a virtual thread makes a call that will block, the JVM records the thread’s state and switches the platform thread to a different virtual thread that has work to do. By default, the memory requirements of a virtual thread are roughly a thousand times lower than those of a platform thread, so scalability can be increased massively without adding hardware.
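To make the "only the creation changes" point concrete, here is a minimal sketch that assumes JDK 21 or later. It uses the standard `Thread.ofPlatform()`, `Thread.ofVirtual()` and `Executors.newVirtualThreadPerTaskExecutor()` APIs; the class and method names are hypothetical and exist only for illustration.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class VirtualThreadCreationSketch {

    // Runs the same task on a thread from the given builder and reports
    // whether that thread was virtual. Only the builder differs between calls.
    static boolean runsOnVirtualThread(Thread.Builder builder) throws InterruptedException {
        boolean[] virtual = new boolean[1];
        Thread t = builder.start(() -> virtual[0] = Thread.currentThread().isVirtual());
        t.join(); // join() makes the write to virtual[0] visible here
        return virtual[0];
    }

    // Executor-based code can switch by swapping the executor factory method.
    static boolean executorUsesVirtualThreads() throws Exception {
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            Callable<Boolean> task = () -> Thread.currentThread().isVirtual();
            Future<Boolean> result = executor.submit(task);
            return result.get();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println("platform builder -> virtual? " + runsOnVirtualThread(Thread.ofPlatform()));
        System.out.println("virtual builder  -> virtual? " + runsOnVirtualThread(Thread.ofVirtual()));
        System.out.println("executor         -> virtual? " + executorUsesVirtualThreads());
    }
}
```

The task itself is identical in every case; code that already submits work to an `ExecutorService` may only need its executor factory changed.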

Understanding scalability versus performance

When considering virtual threads, it is vital to understand the difference between scalability and performance. For example, the scalability of an e-commerce application determines how many users can access it at the same time. The performance of that application determines how quickly the system responds to each request. Virtual threads will potentially increase the scalability of an application, allowing more connections to be handled simultaneously. What they will not do is deliver the results to those connections more quickly. Care should also be taken over how an application processes each connection. If a connection requires CPU-intensive tasks, rather than ones that spend most of their time waiting, you could easily end up with worse scalability and connections timing out because they cannot get access to a shared platform thread.
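The distinction can be illustrated with a small experiment, sketched here assuming JDK 21 or later. Each task blocks in `Thread.sleep`, which stands in for a database or network call; the class name, method name, task count and sleep duration are all hypothetical values chosen for illustration.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class BlockingScalabilitySketch {

    // Submits `tasks` jobs that each block for `blockMillis`, one virtual thread per job,
    // and returns how many of them completed.
    static int runBlockingTasks(int tasks, long blockMillis) {
        AtomicInteger completed = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(blockMillis); // simulated wait on a database or remote service
                        completed.incrementAndGet();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            }
        } // close() waits for every submitted task to finish
        return completed.get();
    }

    public static void main(String[] args) {
        Instant start = Instant.now();
        int done = runBlockingTasks(10_000, 100);
        Duration elapsed = Duration.between(start, Instant.now());
        System.out.println(done + " tasks completed in " + elapsed.toMillis() + " ms");
    }
}
```

No individual task finishes sooner than its 100 ms wait, so per-request performance is unchanged; what changes is how many waiting tasks can be in flight at once. Replacing the sleep with a CPU-bound loop would remove that benefit, because the tasks would then compete for the platform threads rather than release them while waiting.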

To deliver better scalability and performance for your applications, you should consider a different JVM like Azul Platform Prime.

Java virtual threads and a high-performance JVM

The JDK 21 version of Platform Prime includes virtual threads but also uses a different just-in-time (JIT) compiler. The Falcon JIT compiler can produce more heavily optimised code than the OpenJDK HotSpot C2 JIT, resulting in better performance and scalability. Platform Prime fully conforms to the Java SE specification and passes all required TCK tests, making it a drop-in replacement for other JDKs. There is no need to rewrite or even recompile any code.

Why not try virtual threads on Platform Prime JDK 21 for free and see what scalability and performance improvements you get with your applications?